Why transcription accuracy doesn’t matter as much as you think

Software vendors want you to believe that transcription accuracy is make or break for the success of your Auto-QA project. But you might be surprised by what the analytics experts think.

Speech analytics suppliers are always racing to claim the highest transcription accuracy, ultimately hoping to persuade you to pay for those extra percentage points. But is it worth everything they say?

We caught up with two analytics experts – our Speech Analytics Lead, Paula Shaw, and David Naylor, Analytics PhD and founder of Humanotics. In this article, they explain:

Consider it a reality check.

In the contact centre, speech analytics takes calls and transcribes them from audio into text to be used for insights and quality assurance.

“Transcription used to be powered by millions of lines of code,” says Paula. “But, in 2016, Google swapped it for a machine learning model and their accuracy shot through the roof overnight – by 60%, to be precise.”

Since then, transcription has kept getting better, with contact centre speech analytics being trained on call audio and a wide variety of accents. It’s not uncommon to see vendors claiming 95% transcription accuracy. What they won’t tell you is that the level of accuracy you need in practice is often much lower, and that the accuracy of your first transcripts rarely measures up to the sales pitch, because the software is demonstrated under optimal and limited settings. It will need to be fine-tuned, but only to the point that it gives the results you need.

As a general rule, transcriptions should be accurate enough that they make sense when you read them. Telephone conversations are always compressed and background noise on both sides is common. Word-for-word transcription would require an unachievable level of audio quality and significant storage space plus extreme effort and time spent fine-tuning. Even where recording quality is high, if accuracy is paramount (such as in court proceedings), then human transcription is essential.

It’s important to consider why you are transcribing your audio. In the contact centre, this can be for a variety of speech analytics use cases, from tagging calls to generate insight through to augmenting the Quality Assurance process. David explains that building your queries to require perfect transcription takes considerable effort without much reward.

“No matter how good your transcription software is, there will always be inaccuracies,” says David. “Perhaps the machine picked up background noise or the agent spoke too fast. Instead, you’ll want to measure whether the key words and phrases are there and in the right order, rather than searching for word-for-word transcription.”

This is because when you build topic queries, you will be looking for the keywords, not the connecting words or inconsequential phrases surrounding them. For example, suppose you wanted to check that agents are reading out the following disclaimer:

“This policy is for planned treatment only, emergency admissions to A&E are not classified as planned”

Then your query might look like this:

“Planned treatment” OR “plan treatment” THEN “emergency admission” OR “admissions A and E” THEN “not classified” OR “not classed”.

Building out queries in this way means your analytics is flexible enough to register the presence of a compliance statement even if the agent phrases it slightly differently. The relevance to transcription accuracy is that it doesn’t require the entire sentence to be transcribed word for word – only the keywords.
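
A query like the one above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular speech analytics product evaluates queries: the names `normalise`, `matches_in_order` and `DISCLAIMER_QUERY`, the simple substring matching, and the sample machine transcript are all assumptions made for the example.

```python
import re

# Hypothetical representation of the query: one list of alternatives (OR)
# per step, with the steps required in order (THEN).
DISCLAIMER_QUERY = [
    ["planned treatment", "plan treatment"],
    ["emergency admission", "admissions a and e"],
    ["not classified", "not classed"],
]


def normalise(text: str) -> str:
    """Lower-case, spell out '&' and drop punctuation."""
    text = text.lower().replace("&", " and ")
    return " ".join(re.findall(r"[a-z0-9']+", text))


def matches_in_order(transcript: str, groups: list[list[str]]) -> bool:
    """Return True if one phrase from each group appears, in group order.

    Matching is by substring, so "emergency admission" also matches
    "emergency admissions".
    """
    text = normalise(transcript)
    position = 0
    for alternatives in groups:
        ends = []
        for phrase in alternatives:
            needle = normalise(phrase)
            index = text.find(needle, position)
            if index != -1:
                ends.append(index + len(needle))
        if not ends:
            return False
        # Continue after the earliest match to leave the most text to search.
        position = min(ends)
    return True


# An imperfect (invented) machine transcript still satisfies the query.
machine_transcript = (
    "this policy is for plan treatment only um emergency admission "
    "to any are not classed as plant"
)
print(matches_in_order(machine_transcript, DISCLAIMER_QUERY))  # True
```

Several words in the sample transcript are wrong, yet the statement is still detected because each step only needs one of its key phrases to appear.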

The percentage accuracy is calculated as:

Accuracy = (100 – Word Error Rate)%

So if your transcription is 90% accurate, there will be an average of ten mistakes every 100 words. Errors can be deletion of words, insertion of incorrect words, or substitutions (e.g. cat instead of chat).
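
To make the arithmetic concrete, here is a short sketch of how Word Error Rate is commonly computed: the number of substitutions, deletions and insertions needed to turn the machine transcript into a human reference transcript, divided by the number of words in the reference. The function name `word_error_rate` and the sample sentences are illustrative assumptions, and a vendor's own scoring may normalise text differently.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # previous[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    previous = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            current.append(min(
                previous[j] + 1,         # deletion: reference word missing
                current[j - 1] + 1,      # insertion: extra hypothesis word
                previous[j - 1] + cost,  # match or substitution
            ))
        previous = current
    return previous[-1] / len(ref)


reference = "i would like to chat about my policy renewal today"
hypothesis = "i would like to cat about my policy renewal today"

wer = word_error_rate(reference, hypothesis)
print(round(100 * (1 - wer), 1))  # 90.0 (one substitution in ten words)
```

Because insertions count as errors, the error rate can exceed 100% on very poor audio, in which case the accuracy figure is usually reported as zero.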

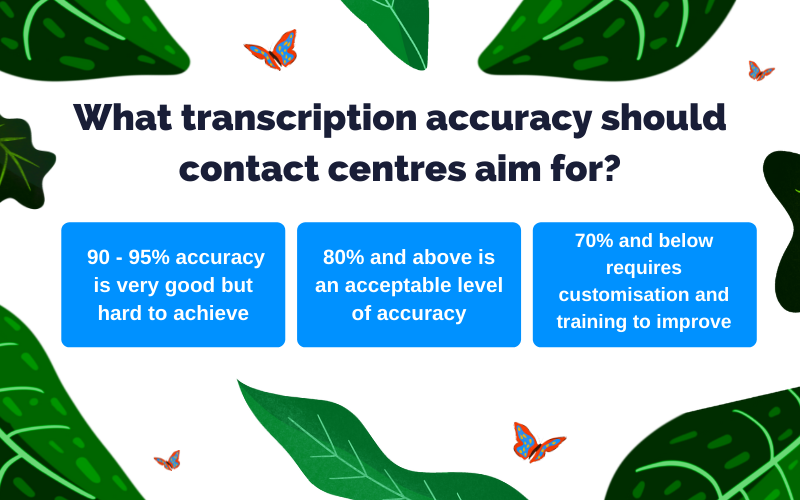

As a general rule, contact centres only need to aim for acceptable or good transcription accuracy. Anything below 70% accuracy requires improvement. Above that, it is more important to focus on the detection of high-value words, rather than overall accuracy.

“You still want to be up there at the 80% plus mark,” says David. “But you don’t necessarily need to take it to the next level – you can instead build topic queries that take inaccuracies into account.”
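
One way to put a number on the detection of high-value words is to check, on a sample of calls with human-checked transcripts, how often a keyword that was actually spoken also shows up in the machine transcript. The sketch below assumes a hypothetical helper `keyword_detection_rate`, invented sample calls and an invented keyword list; it is an illustration of the idea rather than a standard metric from any product.

```python
def keyword_detection_rate(pairs, keywords):
    """Share of spoken keywords that survive transcription.

    pairs    -- list of (reference_transcript, machine_transcript) tuples
    keywords -- high-value words or phrases to look for
    """
    expected = 0
    detected = 0
    for reference, hypothesis in pairs:
        ref = reference.lower()
        hyp = hypothesis.lower()
        for keyword in keywords:
            if keyword in ref:          # the keyword really was spoken
                expected += 1
                if keyword in hyp:      # and the engine captured it
                    detected += 1
    if expected == 0:
        raise ValueError("no keywords found in the reference transcripts")
    return detected / expected


sample_calls = [
    ("i want to cancel my policy", "i want to cancel my polly see"),
    ("please renew the policy today", "please renew a policy to day"),
]
print(keyword_detection_rate(sample_calls, ["cancel", "policy", "renew"]))  # 0.75
```

Here both machine transcripts contain errors, but only one of them affects a keyword, which is the kind of error worth fine-tuning for.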

“You want to reach a baseline of human readability,” explains Paula. “After that, it all comes down to the fine tuning. It depends what area of business you are in and which words you need to look out for.”

“Transcription accuracy is a means to an end. We’re not trying to make the software understand the conversation – it couldn’t actually do that even if you had 100% accuracy, but that’s another misconception for another blog. The aim is to get your queries functioning accurately so that you can get the results you need to drive the particular use case of the transcription.”

“No matter which transcription engine you use, you will never achieve 100% accurate transcription of a phone call,” says David. “And you don’t need to.”

“Plus, the transcription accuracy is only as good as the audio input,” agrees Paula. “What you need is a provider who will work with you to get an accurate output, even if the input is slightly imperfect.”

When you get your speech analytics out of the box and put it into action, the percentage accuracy will need some tweaking. Transcription engines are developed with general conversations in mind and are trained under optimal conditions.

In the real world, speech analytics has to deal with:

Some aspects can be addressed by fine-tuning (tips below), whereas others are best tackled with good query design (more on this in our Analytics Playbook).

“Ideally, any speech analytics should come with a trial where the provider helps you explore the potential for improving your transcription accuracy,” says Paula.

“Absolutely,” agrees David. “At EvaluAgent, we would always make sure the audio is good enough for us to provide a quality service. After that, we help clients to get the transcription as accurate as possible. There are two parts to this journey – improving transcription and adjusting the topic queries, which are the phrases your software searches for to flag certain call types.”

“To improve the transcription, it’s not just about identifying the errors but also having a broad picture of why something might be transcribed incorrectly,” adds Paula – leading us on to some practical tips for improving transcriptions.

Not all noises are controllable – some agents may have children, pets, or nearby construction works – and agents mustn’t come under undue stress about their environment. However, it is useful to review working conditions and provide support to protect against excessive volume during calls.

“Background noise has always been a challenge for speech analytics,” says David. “Working from home presents new types of interference, but even before the pandemic, transcription was sometimes up against extremely noisy contact centres. Having a buzzing shop floor was a way to boost team energy.”

“Sometimes it’s simply a case of making your team aware of how noise influences the transcription,” says Paula. “On the shop floor, for example, it can help to remind people of the potential impact their speaking volume has on the colleagues either side of them.”

Luckily, accurate transcription doesn’t require crystal clear conversations – but it is helpful to review calls for interference and audio dropout to determine whether the cause is internal or external.

“While we’re doing this interview now, I’m working from home – if I didn’t have my headphones, the transcript could easily pick up background noise; I have a husband, a dog, a parrot, so there’s a lot of potential for interruption,” says Paula. “Background noise interferes with speaker separation and can sometimes lead the transcription engine to assign words to the wrong speaker.”

“In most cases, even if there’s a problem, we wouldn’t usually need to go down the route of replacing all the agent headphones,” highlights David. “There’s a lot we can do by working with the core recording platform to improve the quality of the transcriptions.”

For a detailed breakdown of optimal file sizes, compression, bit rates and sample sizes, refer to our Analytics Playbook.

Stereo recordings, or split audio recordings, assign each speaker to their own channel, so that the voices are on two separate audio waves (left and right). Mono recordings assign both speakers to a single channel to produce one audio wave.

“Today, most recording systems have stereo capability and you can easily change the setup by talking to your provider,” says David. “Whether you choose to use it or not is a question of cost. Split channel and low compression audio is higher quality but costs more to store (because the files are larger). Those costs should be relatively small, though, and while there is software that tries to separate speakers by distinguishing pauses and voices, it’s not as accurate.”
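
To get a rough feel for the storage difference, the size of uncompressed PCM audio is the sample rate multiplied by the bytes per sample, the number of channels and the call duration. The figures below (8 kHz sampling, 16-bit samples, a six-minute call) are assumptions chosen for illustration, and `pcm_size_bytes` is a hypothetical helper; real recording platforms usually apply compression, so actual file sizes will be smaller.

```python
def pcm_size_bytes(duration_seconds: int,
                   sample_rate_hz: int = 8000,
                   bit_depth: int = 16,
                   channels: int = 1) -> int:
    """Size of uncompressed PCM audio, ignoring file headers."""
    bytes_per_sample = bit_depth // 8
    return duration_seconds * sample_rate_hz * bytes_per_sample * channels


call_length = 6 * 60  # an assumed six-minute call, in seconds

mono = pcm_size_bytes(call_length, channels=1)
stereo = pcm_size_bytes(call_length, channels=2)

print(mono)    # 5760000 bytes
print(stereo)  # 11520000 bytes
```

Under these assumptions a split-channel recording takes exactly twice the space of a mono one, which is the trade-off against more reliable speaker separation.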

In the real world, everyone speaks differently and this can present challenges to transcription. Common obstacles include language barriers, accents, pronunciation, speech impediments and behaviours such as rushing or mumbling. Many of these can be addressed by training the language model, but training your agents too will give you the best foundation to build on. After all, speech analytics is a tool to help improve the quality of conversations – if the software has trouble understanding an agent, it’s likely that some customers will too!

“Some transcription errors can be addressed by giving agents feedback on clear and accurate communication,” says Paula, “or it might be a case of shifting their focus to resolving contacts rather than reducing average handle time, which can cause people to rush.”

“Transcription engines are trained to recognise a whole range of different conversation types,” says David. “What they’re not trained to recognise, necessarily, are the brand names, product names, or specialist language.”

From the outset, you will need to boost the importance of certain words and train the language model to recognise them. This can be as simple as providing a text-based list of booster words, but some models can be trained on the sounds of those new words as well.

“You’re looking to increase the focus on keywords, which are the ones that have the biggest impact on your outcomes,” says Paula.

Phrases for your booster list might include:

As well as boosting words and phrases, you can also reduce the importance of some terms, such as those that may be used but are not relevant to your operations.

Addressing the differences in how people speak (and what they say vs what they mean) is a double-pronged process that improves the end result given by your speech analytics.

“People from Scotland, for instance, will speak very differently from people in the Northwest,” says Paula. “It’s not just about accents, but dialect and idioms. For example, someone might say ‘you must be having a laugh’ rather than ‘I’m very disappointed about this.’”

“Again, there are two parts to this – the transcription itself, and the queries,” says David. “When it comes to transcription, it helps to make sure the engine you use has broad capabilities in your language. For example, some models are trained in UK English, some US English, and others claim to support Global English covering all different accents.”

“The universal phoneme set considers the different sounds out of people’s mouths and whether it equates to the same word,” explains Paula. “We can use this to feed into the language model and account for the way people speak. On the other hand, differences in regional phrases can be addressed by adding to your query groups.”

“Over time, as new generations emerge as customers, speaking habits will change, as will products and services. That means the approach to building a query will evolve. It’s not just a case of getting your transcription and queries right the first time, but committing to continuous improvement, to keep that accuracy as high as possible,” says Paula.

“Make sure you plan this maintenance periodically, the timing of which will depend on how fast-paced your business is,” adds David.

Transcription accuracy is important up to a certain level – but after that, it’s what you do with it that makes the difference to your contact centre. Rather than fixating on percentage points of accuracy, focus on getting accurate insights and using them to improve performance.